I'm sure we all have tasks that take many commands to accomplish something simple, that are hard to remember and get right every time, or that are just annoying to do. One that I run into a lot is taking production files and a database and syncing them down to a staging environment, or the opposite for a site launch. That task isn't really hard, but it involves running a handful of commands and logging into two different servers. It is just one example of the many tasks I have to do daily on servers, which is why I have created many bash scripts to do simple tasks for me, like spinning up new Docker containers, syncing databases across environments, and backing up important directories.

To get started, all you need to know about bash scripts is that they can be as simple as a bunch of bash commands you would type on the server, strung together into a single file. You can get more complicated by taking user input and looping over things, but you don't have to.

Your First Bash Script

To get started, let's do the classic "Hello World" example. Create a new file on your computer and call it whatever you like; I called mine learning-bash.sh. Then put the following in it.

#!/bin/bash

echo "Hello World"

The #! character sequence (hash, exclamation mark) is referred to as the shebang. Following it is the path to the interpreter that should be used to run the rest of the lines in the text file. After that come the lines of code we are running; for this example we are running just one line, which echoes out "Hello World".

Now you're ready to run your script and see if it works, but first you need to tell your system that the file is allowed to be executed. To do that, run chmod +x learning-bash.sh from the command line, which adds the executable permission to the file. Now you can run the file by giving your terminal the path to it, ./learning-bash.sh, and you will see Hello World printed as the output.
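As a rough sketch, the whole sequence can be tried in a throwaway directory. The mktemp scratch directory and the here-document used to write the file are my own additions for illustration; the file name and its contents are the ones from above.

```shell
#!/bin/bash

# Work in a scratch directory so nothing in your project is touched.
workdir=$(mktemp -d)

# Write the two-line script from above into learning-bash.sh.
cat > "$workdir/learning-bash.sh" <<'EOF'
#!/bin/bash

echo "Hello World"
EOF

# Add the executable permission, then run the script by its path.
chmod +x "$workdir/learning-bash.sh"
output=$("$workdir/learning-bash.sh")

echo "$output"   # prints: Hello World
```

If you skip the chmod step, the shell refuses to run the file and reports a permission error instead.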

Great, you made your first script! Now let's move on to something much more useful.

Syncing Databases Across Environments

Below is an only slightly edited version of the bash script I have running on a staging server. It lets me quickly import and export databases across all of our environments at Code Koalas, and it saves me hours of time each month. It is also available as a gist.

#!/bin/bash

echo "What do you want to do? (import/export)"

read dbtype

echo "What environment do you want? (dev/stage/prod)"

read environment

echo "What is the database name?"

read databasename

if [ "$environment" == "dev" ]; then

dbhost='dev-db-host'

elif [ "$environment" == "stage" ]; then

dbhost='stage-db-host'

elif [ "$environment" == "prod" ]; then

dbhost='prod-db-host'

else

dbhost=$environment

echo "Using Custom DB Host";

fi

if [ "$dbtype" == "import" ]; then

echo "What is the filename (give full path to file if not in this directory)"

read databasefile

if [ "$environment" == "prod" ]; then

echo "Since it's prod we are dumping db before import to $databasename-backup.sql"

mysqldump -h $dbhost $databasename > $databasename-backup.sql

fi

echo "Importing DB";

mysql -h $dbhost $databasename < $databasefile

elif [ "$dbtype" == "export" ]; then

echo "Dumping DB to $databasename.sql"

mysqldump -h $dbhost $databasename > $databasename.sql

fi

echo "Have a great day!"

There is a lot there, so here is a brief explanation of what is happening before I break down each section. First we establish which task, environment, and database we are dealing with. That does make this script more complex, but it's nicer than a different script for each task and each environment. The environments we generally want to talk to are programmed in, so the user can type dev and it expands to the actual hostname for dev, but if you don't type a known environment we just try whatever you typed as the hostname. Next, if we are importing, we ask the user for the file to import, and if they're importing to prod we back up the prod database first. If we are exporting, we just export that database to a file.

The script isn't too crazy, and it's nothing you couldn't do by hand every time. The main thing I wanted to accomplish here is not having to copy and paste the hostnames every time, as they're very long. So let's break down the commands we are using here.

echo "What do you want to do? (import/export)"

read dbtype

First is an echo, which outputs text to the user; we've seen it before, so nothing too special there. After that we have read dbtype. With the read command your script will wait for user input, then store that input in the variable dbtype.
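If you want to try read without typing anything, here is a small sketch that feeds it the answer from a here-document. The -r flag is an addition of mine (it stops read from treating backslashes as escape characters); the script above uses plain read.

```shell
#!/bin/bash

echo "What do you want to do? (import/export)"

# Simulate a user typing "export" by supplying the input from a here-document.
read -r dbtype <<EOF
export
EOF

# The typed text is now available as $dbtype.
echo "You chose: $dbtype"   # prints: You chose: export
```

Run interactively, you would drop the here-document and the script would pause at read until you press Enter.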

if [ "$environment" == "dev" ]; then

dbhost='dev-db-host'

elif [ "$environment" == "stage" ]; then

dbhost='stage-db-host'

elif [ "$environment" == "prod" ]; then

dbhost='prod-db-host'

else

dbhost=$environment

echo "Using Custom DB Host";

fi

Next, this big chunk of code checks the user input to decide which environment we need to connect to. if [ CONDITION ]; then is the syntax of if statements in bash. So we check whether "$environment" == "dev", and if so we set a new variable, dbhost='dev-db-host', which gets used later in the code. That's most of this chunk, but you will also see that the else-if syntax is elif, and that you end your if check with fi.
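The same lookup can also be written with a case statement. That is a swapped-in alternative to the if/elif chain, not what the script above does. In this sketch resolve_dbhost is a hypothetical helper name, and the host names are the placeholders from the script.

```shell
#!/bin/bash

# resolve_dbhost (hypothetical helper): print the hostname for a short
# environment name, or pass the input through as a custom hostname.
resolve_dbhost() {
  case "$1" in
    dev)   echo 'dev-db-host' ;;
    stage) echo 'stage-db-host' ;;
    prod)  echo 'prod-db-host' ;;
    *)     echo "$1" ;;   # unknown name: treat it as a custom DB host
  esac
}

dbhost=$(resolve_dbhost "stage")
echo "$dbhost"   # prints: stage-db-host
```

Whether this reads better than if/elif is mostly taste; case tends to stay tidier as the list of environments grows.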

if [ "$dbtype" == "import" ]; then

echo "What is the filename (give full path to file if not in this directory)"

read databasefile

if [ "$environment" == "prod" ]; then

echo "Since it's prod we are dumping db before import to $databasename-backup.sql"

mysqldump -h $dbhost $databasename > $databasename-backup.sql

fi

echo "Importing DB";

mysql -h $dbhost $databasename < $databasefile

elif [ "$dbtype" == "export" ]; then

echo "Dumping DB to $databasename.sql"

mysqldump -h $dbhost $databasename > $databasename.sql

fi

Now that you've seen how ifs work, this section shouldn't look as scary. Based on a dbtype of import or export we run a couple of different MySQL commands. If it's an import, we echo out a question asking for the path of the import file. Once we have that, if it's prod we back it up first (always back up prod!), and then in every case we run mysql -h $dbhost $databasename < $databasefile, which is the mysql command to import a database from a file. If we are running an export, we run mysqldump -h $dbhost $databasename with its output redirected to a .sql file, which is the MySQL command to dump a database to a file.
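One possible hardening, sketched here as an assumption rather than something the script above does, is to check that the import file exists and is not empty before touching the database. can_import is a hypothetical helper name. The sketch also quotes its variables, so a path containing spaces is passed along as a single argument.

```shell
#!/bin/bash

# can_import (hypothetical helper): succeed only when the dump file
# exists and has a size greater than zero.
can_import() {
  local databasefile=$1
  if [ ! -s "$databasefile" ]; then
    echo "$databasefile is missing or empty, not importing" >&2
    return 1
  fi
}

# Demo with a throwaway file so nothing touches a real database.
demo_file=$(mktemp)
echo "SELECT 1;" > "$demo_file"

if can_import "$demo_file"; then
  # In the real script this is where the import would go:
  #   mysql -h "$dbhost" "$databasename" < "$databasefile"
  echo "OK to import $demo_file"
fi

rm -f "$demo_file"
```

In the script itself, the guard would sit right after read databasefile, before the prod backup and the import.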

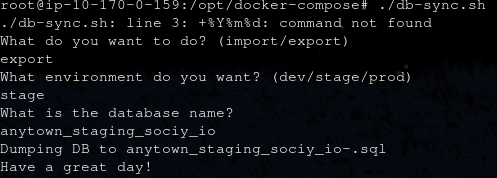

This file is pretty simple and powerful, the most powerful thing it's done for me is give others the confidence needed to get their own database dumps from the staging server instead of having to ask me every time. It also makes it much faster for me to move databases around when I do have to do it myself, and I no longer have to worry about writing my MySQL command backwards and killing a database. Here's the script in action.

Wrapping Up

Bash scripts are really just the tip of the automation iceberg, but don't underestimate their power or usefulness. Just recently I was impressed with how easy it was to spin up a site on production, which involves copying the database and files, creating the entry in docker-compose, creating the file structure, moving files, creating the database, and importing it, all from a single command I wrote months ago. That script took an hour-long process and made it ten minutes.

I hope this post gave you the knowledge you need to get started making your own scripts and saving yourself time for more important things, like playing Super Mario Maker 2, traveling, or whatever it is you do.