There are many cases in which getting code to your server needs to be more than just a git pull every 15 minutes or so. This is where build and integration pipelines come into play. It’s worth mentioning that there are zero cases where FTPing files up to the server is the right solution. Some reasons to use a pipeline are:

- You don’t want to track compiled files or community-contributed files in your git repo

- You’re using something like Composer and don’t want PHP version or extension conflicts between environments

- You want your site to run in a Docker container.

If those reasons sound like your situation, you should probably look into getting a pipeline running for your project.

What Are Pipelines?

Simply put, pipelines are scripts that run based on triggers in your git repo. For example, you can run a linter every time code gets merged into master to make sure no one broke anything before that code goes to the server.

More formally, a pipeline is made up of:

- Jobs that define what to run. For example, code compilation, tests, or building Docker images

- Stages that define when and how to run. For example, that tests run only after code compilation

Simple pipeline example

Here is a pipeline with three stages:

- build, with a job called compile

- test, with two jobs called php-tests and visual-regression

- deploy, with two jobs called build-dev and build-production
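As a rough sketch, the three-stage pipeline above could be written in GitLab’s .gitlab-ci.yml syntax like this. The echo commands are placeholders standing in for real build, test, and deploy commands, which depend on your project.

```yaml
# Hypothetical sketch: every script line is a placeholder.
stages:
  - build
  - test
  - deploy

compile:
  stage: build
  script:
    - echo "compile the code here"

php-tests:
  stage: test
  script:
    - echo "run PHP tests here"

visual-regression:
  stage: test
  script:
    - echo "run visual regression tests here"

build-dev:
  stage: deploy
  script:
    - echo "build the dev image here"

build-production:
  stage: deploy
  script:
    - echo "build the production image here"
```

By default GitLab runs jobs in the same stage in parallel and only starts a stage once every job in the previous stage has succeeded. Handing compiled output from compile to the later stages would also need artifacts, which this sketch leaves out.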

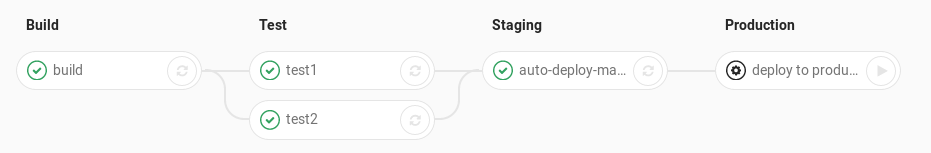

Visualizing pipelines

This can all seem complex until you see it running. Luckily, most CI/CD services have a visual representation of what the pipelines look like and the order the jobs run in. If a job fails, the visualization highlights which step of the pipeline failed and shows its errors, which makes the issue easier to track down. GitLab’s visualization is what you see below.

Building Our Pipelines

At Code Koalas we use GitLab, so all the examples I’ll be showing are for GitLab, but I’ve converted the same concepts to both Bitbucket and TeamCity without much trouble. To build a pipeline in GitLab, you create a .gitlab-ci.yml file in the root of your repo and configure everything you need your pipeline to do there.

For our Drupal sites we run a pretty simple pipeline: it runs a lint, and if that lint passes, it runs composer install for the site, compiles the theme, builds a Docker image, and pushes it to the registry.

You can see our full base .gitlab-ci.yml in the base Drupal Composer install we keep on GitHub. A minimized version of it is below:

```yaml
variables:
  IMAGE_TAG_RELEASE: $CI_REGISTRY_IMAGE:$CI_COMMIT_REF_NAME

stages:
  - lint
  - deploy

lint:
  stage: lint
  image: donniewest/drupal-node-container:latest
  only:
    - dev
  script:
    - cd docroot/themes/custom/THEMENAME && npm install && npm run lint

build-dev:
  image: docker:latest
  services:
    - docker:18-dind
  stage: deploy
  only:
    - master
  script:
    - docker run -v $PWD:/var/www/html donniewest/drupal-node-container:latest /bin/bash -c "composer install && cd ./docroot/themes/custom/THEMENAME/ && npm install && npm run build && npm run compile"
    - docker login -u gitlab-ci-token -p $CI_JOB_TOKEN $CI_REGISTRY
    - docker build -t $IMAGE_TAG_RELEASE ./
    - docker push $IMAGE_TAG_RELEASE

build-production:
  image: docker:latest
  services:
    - docker:18-dind
  stage: deploy
  only:
    - /^RELEASE-[\.\d]*/
  except:
    - branches
  script:
    - docker run -v $PWD:/var/www/html donniewest/drupal-node-container:latest /bin/bash -c "composer install && cd ./docroot/themes/custom/THEMENAME/ && npm install && npm run build && npm run compile"
    - docker login -u gitlab-ci-token -p $CI_JOB_TOKEN $CI_REGISTRY
    - docker build -t $CI_REGISTRY_IMAGE:production ./
    - docker push $CI_REGISTRY_IMAGE:production
```

Breaking down the pipeline

Looking at that file all at once without context can be daunting, but there are really just two stages we need to look at: the Lint Stage and the Deploy Stage. The rest is mostly boilerplate.
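Since build-dev and build-production repeat most of that boilerplate, one option (not something our base file does) is to move the shared parts into a hidden job and pull them into each job with GitLab’s extends keyword. This is a sketch that assumes the same variables block and images as the full file; the hidden job name .build-image is made up for illustration.

```yaml
# Hypothetical hidden job holding the setup both deploy jobs share.
# GitLab never runs jobs whose names start with a dot on their own.
.build-image:
  image: docker:latest
  services:
    - docker:18-dind
  stage: deploy
  before_script:
    # Install Composer dependencies and build the theme in the Drupal/Node image.
    - docker run -v $PWD:/var/www/html donniewest/drupal-node-container:latest /bin/bash -c "composer install && cd ./docroot/themes/custom/THEMENAME/ && npm install && npm run build && npm run compile"
    - docker login -u gitlab-ci-token -p $CI_JOB_TOKEN $CI_REGISTRY

build-dev:
  extends: .build-image
  only:
    - master
  script:
    - docker build -t $IMAGE_TAG_RELEASE ./
    - docker push $IMAGE_TAG_RELEASE

build-production:
  extends: .build-image
  only:
    - /^RELEASE-[\.\d]*/
  except:
    - branches
  script:
    - docker build -t $CI_REGISTRY_IMAGE:production ./
    - docker push $CI_REGISTRY_IMAGE:production
```

extends merges the hidden job’s keys into each job, and before_script runs ahead of each job’s own script, so the behavior matches the two separate jobs while the shared commands live in one place.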

Lint Stage

The main point of our Lint Stage is to confirm that our theme still passes all linting.

```yaml
lint:
  stage: lint
  image: donniewest/drupal-node-container:latest
  only:
    - dev
  script:
    - cd docroot/themes/custom/THEMENAME && npm install && npm run lint
```

Here we are using a custom Docker image that extends the default Drupal image and adds Node to it so we can compile a theme. You can view it on GitHub as drupal-node-container. The “only: dev” setting means this job will only run on the dev branch. For a lint you might want to remove that setting entirely so the lint runs on every pushed commit, which means you lint before you merge. The “script” section then defines the commands we want the job to run. Here it changes into the theme directory, runs npm install, and then runs the theme’s lint script.
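A sketch of that variation is the same lint job with the only section removed:

```yaml
# Same lint job with no "only" restriction, so it runs for every push.
lint:
  stage: lint
  image: donniewest/drupal-node-container:latest
  script:
    - cd docroot/themes/custom/THEMENAME && npm install && npm run lint
```

With no only (or rules) restriction, GitLab runs the job for pushes to every branch and for tags, so lint failures show up before a merge rather than after it.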

Deploy Stage

The main point of the deploy stage is to install the composer dependencies, build and compress the theme, then build the Docker image that holds our site.

```yaml
build-production:
  image: docker:latest
  services:
    - docker:18-dind
  stage: deploy
  only:
    - /^RELEASE-[\.\d]*/
  except:
    - branches
  script:
    - docker run -v $PWD:/var/www/html donniewest/drupal-node-container:latest /bin/bash -c "composer install && cd ./docroot/themes/custom/THEMENAME/ && npm install && npm run build && npm run compile"
    - docker login -u gitlab-ci-token -p $CI_JOB_TOKEN $CI_REGISTRY
    - docker build -t $CI_REGISTRY_IMAGE:production ./
    - docker push $CI_REGISTRY_IMAGE:production
```

The deploy stage is a little different because it uses the docker:18-dind service. Dind stands for Docker-in-Docker, meaning we are running and building Docker containers inside our job’s own Docker container. The only section uses the regex /^RELEASE-[\.\d]*/, which matches any ref whose name starts with "RELEASE-". Because the pattern isn’t anchored at the end, "RELEASE-" followed by anything will match, not just digits and dots. We use the format RELEASE-X.X.X for tags, and that line lets us deploy those tags. The except section on the next line blocks the job from running on branches, locking it down to tags only, which is what we want here.
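If you want the job to fire only for tags shaped exactly like RELEASE-X.Y.Z, one option is GitLab’s rules keyword with an end-anchored pattern. This is a sketch, not what our base file uses; only the changed keys are shown, and rules replaces the job’s only and except sections because GitLab doesn’t allow both in the same job.

```yaml
build-production:
  # Replaces only/except. CI_COMMIT_TAG is unset in branch pipelines,
  # so branches never match and no separate "except: branches" is needed.
  rules:
    - if: '$CI_COMMIT_TAG =~ /^RELEASE-\d+\.\d+\.\d+$/'
```

Tightening the pattern in the existing only line to /^RELEASE-\d+\.\d+\.\d+$/ would have the same effect while keeping the only/except style.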

The script section runs a few more commands than our lint job does. Using the same Drupal Node Container, we run composer install and then the theme-building commands. From there, we build our new Docker image, tag it production, and push it.

Deploying our new Image

That is the end of our pipeline in GitLab. The next step is to take the Docker image we built and deploy it to our server. To do that, we have a cron job on the server that runs docker-compose pull && docker-compose up -d every 15 minutes.
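A minimal sketch of the server side might look like the docker-compose.yml below. It shows only the web service; the registry path, port mapping, and the /srv/site directory in the crontab comment are placeholders, not our actual setup.

```yaml
# Hypothetical docker-compose.yml on the server.
# Crontab entry (every 15 minutes), assuming this file lives in /srv/site:
#   */15 * * * * cd /srv/site && docker-compose pull && docker-compose up -d
version: "3"
services:
  web:
    # Placeholder path: use your own project's registry image and tag.
    image: registry.gitlab.com/your-group/your-project:production
    ports:
      - "80:80"
    restart: unless-stopped
```

docker-compose up -d only recreates the container when the pulled image (or the service configuration) has changed, so the cron job is a no-op between releases. If the project’s registry is private, the server needs a one-time docker login against it before the pull will succeed.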

Wrapping Up

Pipelines are a great way to level up your code deployment, and once you dip your toes in the water you will realize it’s not too hard to get going. I hope this helped shed some light on how to get pipelines working with your projects.